Web Scraping in R: rvest Tutorial

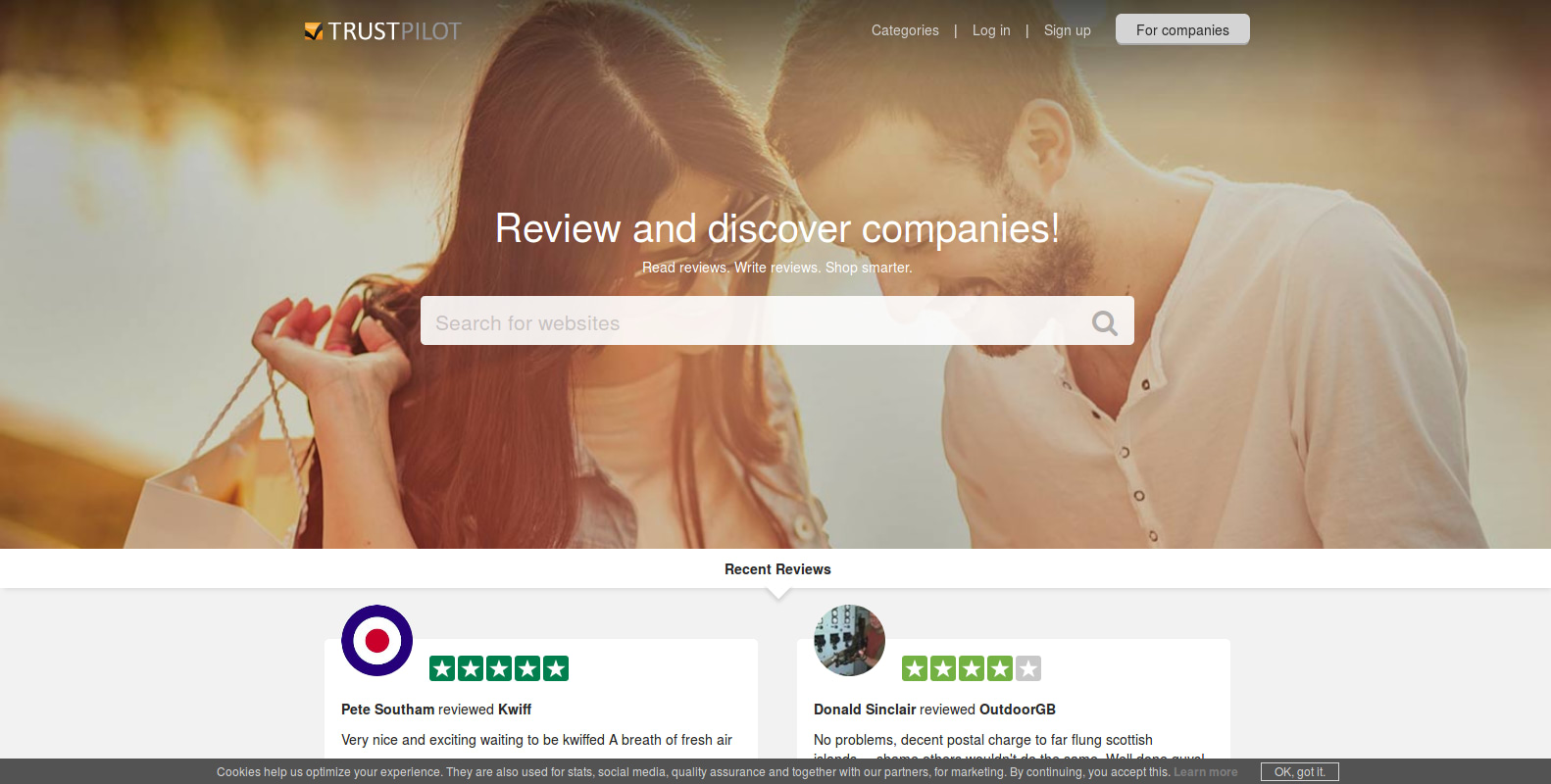

Trustpilot has become a popular website for customers to review businesses and services. In this short tutorial, you'll learn how to scrape useful information off this website and generate some basic insights from it with the help of R. You will find that Trustpilot might not be as trustworthy as advertised.

More specifically, this tutorial will cover the following:

- You'll first learn how you can scrape Trustpilot to gather reviews;

- Then, you'll see some basic techniques to extract information off of one page: you'll extract the review text, rating, name of the author and time of submission of all the reviews on a subpage.

- With these tools at hand, you're ready to step up your game and compare the reviews of two companies (of your own choice): you'll see how you can make use of tidyverse packages such as `ggplot2` and `dplyr`, in combination with `xts`, to inspect the data further and to formulate a hypothesis, which you can further investigate with the `infer` package, a package for statistical inference that follows the philosophy of the tidyverse.

On Trustpilot a review consists of a short description of the service, a 5-star rating, a user name and the time the post was made.

Your goal is to write a function in R that will extract this information for any company you choose.

Create a Scraping Function

First, you will need to load all the libraries for this task.
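The original listing is not preserved here. A minimal sketch of the setup, assuming the packages below are installed (the exact set is an assumption based on the functions used later in this tutorial):

```r
# Install any missing package first with install.packages("<name>")
library(rvest)    # read_html(), html_nodes(), html_text(), html_attr()
library(purrr)    # map() for applying a function over a list
library(tibble)   # tibble() for building tidy data frames
library(stringr)  # string helpers such as str_match()
```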

Find All Pages

As an example, you can choose the e-commerce company Amazon. This is purely for demonstration purposes and is in no way related to the case study that you'll cover in the second half of the tutorial.

The landing page URL will be the identifier for a company, so you will store it as a variable.

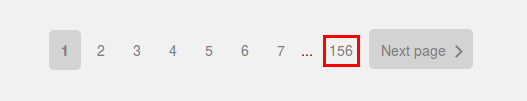

Most large companies have several review pages. On Amazon's landing page you can read off the number of pages; here it is 155.

Clicking on any one of the subpages reveals a pattern for how the individual URLs of a company can be addressed. Each of them is the main URL with a page parameter appended, whose value `n` is the number of a review page, here any number between 1 and 155.

This is how your program should operate:

- Find the maximum number of pages to be queried

- Generate all the subpages that make up the reviews

- Scrape the information from each of them

- Combine the information into one comprehensive data frame

Let's start with finding the maximum number of pages. Generally, you can inspect the visual elements of a website using web development tools native to your browser. The idea behind this is that all the content of a website, even if dynamically created, is tagged in some way in the source code. These tags are typically sufficient to pinpoint the data you are trying to extract.

Since this is only an introduction, you can take the scenic route and directly look at the source code yourself.

HTML data is organised as nested, tagged elements: each element has a tag name, optional attributes such as a class, and the content enclosed between its opening and closing tags.

To get to the data, you will need some functions of the `rvest` package. To convert a website into an XML object, you use the `read_html()` function. You supply a target URL, and the function calls the web server, collects the data, and parses it. To extract the relevant nodes from the XML object you use `html_nodes()`, whose argument is the class descriptor, prepended by a `.` to signify that it is a class. The output will be a list of all the nodes found in that way. To extract the tagged data, you need to apply `html_text()` to the nodes you want. For the cases where you need to extract the attributes instead, you apply `html_attrs()`. This will return a list of the attributes, which you can subset to get to the attribute you want to extract.
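To make these steps concrete, here is a small sketch that runs on a hand-written HTML snippet instead of a live website. The markup and the class names `review-text` and `review-date` are made up for illustration.

```r
library(rvest)

# A tiny hand-written page standing in for a real website (hypothetical markup)
page <- read_html('
  <html><body>
    <p class="review-text">Great service</p>
    <p class="review-text">Slow delivery</p>
    <time class="review-date" datetime="2018-02-01T10:15:00Z">1 Feb</time>
  </body></html>')

# Select nodes by class: the leading "." marks a class selector
text_nodes <- html_nodes(page, ".review-text")

# Extract the content enclosed by the tags
review_text <- html_text(text_nodes)

# Extract all attributes of each matching node (a list of named character vectors),
# then subset to the attribute of interest
date_attrs  <- html_attrs(html_nodes(page, ".review-date"))
review_date <- date_attrs[[1]][["datetime"]]
```

Passing a URL to `read_html()` instead of a literal string fetches and parses the live page in the same way.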

Let's apply this in practice. After a right-click on Amazon's landing page you can choose to inspect the source code. You can search for the number '155' to quickly find the relevant section.

You can see that all of the page buttons are tagged with the same class. You need a function that takes the raw HTML of the landing page and extracts the second to last item of that class.

The step specific to this function is the application of the `html_nodes()` function, which extracts all nodes of the pagination class. The last part of the function simply takes the correct item of the list, the second to last, and converts it to a numeric value.

To test the function, you can load the starting page using the `read_html()` function and apply the function you just wrote.
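The function itself is not preserved in this copy, so the following is only a sketch of what it might look like. The class name `pagination-page` is an assumption, and the test runs on a hand-written stand-in for the landing page rather than the live site.

```r
library(rvest)

# Return the number of the last review page.
# ".pagination-page" is an assumed class name; check the live page source.
get_last_page <- function(html) {
  pages_data <- html %>%
    html_nodes(".pagination-page") %>%
    html_text(trim = TRUE)

  # The last button is "next page", so the page count is the second to last item
  as.numeric(pages_data[length(pages_data) - 1])
}

# Hypothetical stand-in for the landing page
landing <- read_html('
  <nav>
    <a class="pagination-page">1</a>
    <a class="pagination-page">2</a>
    <a class="pagination-page">155</a>
    <a class="pagination-page">Next page</a>
  </nav>')

last_page <- get_last_page(landing)
```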

Now that you have this number, you can generate a list of all relevant URLs.

You can manually check that the entries of this list are indeed valid and can be called via a web browser.
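A possible way to build that list is sketched below. The base URL is a placeholder and the `?page=` suffix is an assumption about the site's URL pattern; confirm both by clicking through a few subpages.

```r
# Placeholder landing page; substitute the real company URL
base_url  <- "https://www.example.com/review/some-company"
last_page <- 155  # page count read off the landing page

# One URL per review page (the "?page=" pattern is assumed)
list_of_pages <- paste0(base_url, "?page=", seq_len(last_page))

head(list_of_pages, 3)
```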

Extract the Information of One Page

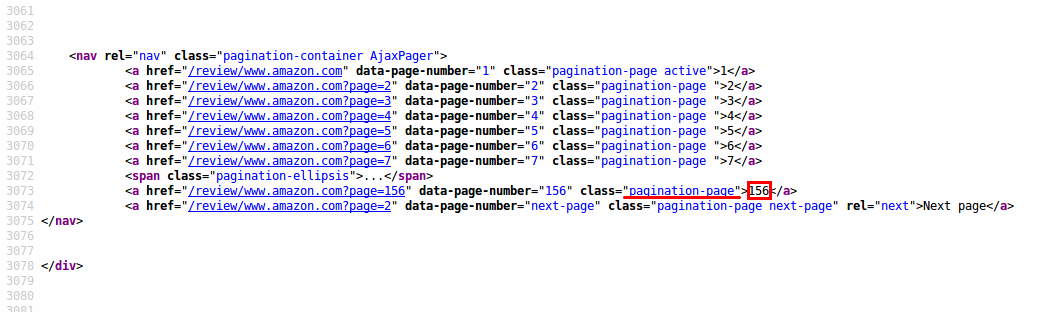

You want to extract the review text, rating, name of the author and time of submission of all the reviews on a subpage. You can repeat the steps from earlier for each of the fields you are looking for.

For each of the data fields you write one extraction function using the tags you observed. At this point a little trial-and-error is needed to get the exact data you want. Sometimes you will find that additional items are tagged, so you have to reduce the output manually.
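As an illustration, two such extractors might look like the sketch below. The class names `review-body` and `consumer-name` are assumptions, as is the stand-in page; on the real site you would substitute whatever classes you find in the source.

```r
library(rvest)

# Review text: all nodes of the (assumed) review-body class
get_reviews <- function(html) {
  html %>%
    html_nodes(".review-body") %>%
    html_text(trim = TRUE)   # trim strips surrounding whitespace and line breaks
}

# Author names: all nodes of the (assumed) consumer-name class
get_reviewer_names <- function(html) {
  html %>%
    html_nodes(".consumer-name") %>%
    html_text(trim = TRUE)
}

# Hypothetical stand-in for one review subpage
page <- read_html('
  <div>
    <article><span class="consumer-name"> Alice </span>
      <p class="review-body"> Fast delivery, thanks </p></article>
    <article><span class="consumer-name"> Bob </span>
      <p class="review-body"> Parcel arrived late </p></article>
  </div>')

reviews   <- get_reviews(page)
reviewers <- get_reviewer_names(page)
```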

The datetime information is a little trickier, as it is stored as an attribute.

In general, you look for the broadest description and then try to cut out all redundant information. Because time information does not appear only in the reviews, you also have to extract the relevant information and filter by the correct entry.
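The sketch below follows that idea: it first selects every `<time>` tag, then keeps only the ones that belong to reviews, and finally reads the timestamp from the `datetime` attribute. The class name `review-date` and the timestamp format are assumptions about the markup.

```r
library(rvest)

get_review_dates <- function(html) {
  # Broadest description first: every <time> tag on the page
  time_nodes <- html_nodes(html, "time")

  # Keep only timestamps that belong to reviews (assumed class "review-date")
  is_review <- html_attr(time_nodes, "class") %in% "review-date"

  # The timestamp is stored as an attribute, not as text
  stamps <- html_attr(time_nodes[is_review], "datetime")

  # Parse ISO-like timestamps; characters after the seconds are ignored
  as.POSIXct(stamps, format = "%Y-%m-%dT%H:%M:%S", tz = "UTC")
}

# Hypothetical stand-in page with one unrelated timestamp
page <- read_html('
  <div>
    <time class="site-updated" datetime="2019-01-01T00:00:00Z">site updated</time>
    <time class="review-date" datetime="2018-02-01T10:15:00Z">1 Feb</time>
    <time class="review-date" datetime="2018-02-03T18:40:00Z">3 Feb</time>
  </div>')

review_dates <- get_review_dates(page)
```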

The last function you need is the extractor of the ratings. You will use regular expressions for pattern matching. The rating is placed as an attribute of the tag. Rather than being just a number, it is part of a longer string that contains the number you want. Regular expressions can be a bit unwieldy, but the `rebus` package lets you write them in a nice human-readable form. In addition, `rebus`' piping functionality, via the `%R%` operator, lets you decompose complex patterns into simpler subpatterns to structure more complicated regular expressions.
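A sketch of such an extractor follows. It assumes that the rating is encoded in the `class` attribute as `count-` followed by one digit and that the nodes carry the class `star-rating`; both are illustrative guesses, not confirmed details of the site.

```r
library(rvest)
library(purrr)
library(stringr)
library(rebus)

# Readable pattern: the literal "count-" followed by one captured digit
# (the "count-" prefix is an assumption about the markup)
rating_pattern <- "count-" %R% capture(DGT)

get_star_ratings <- function(html) {
  html %>%
    html_nodes(".star-rating") %>%             # assumed class of the rating nodes
    html_attr("class") %>%                     # e.g. "star-rating count-5"
    map(str_match, pattern = rating_pattern) %>% # one match matrix per node
    map(2) %>%                                 # second entry is the captured digit
    unlist() %>%
    as.numeric()
}

# Hypothetical stand-in page
page <- read_html('
  <div>
    <div class="star-rating count-5"></div>
    <div class="star-rating count-2"></div>
  </div>')

ratings <- get_star_ratings(page)
```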

After you have tested that the individual extractor functions work on a single URL, you combine them to create a tibble, which is essentially a data frame, for the whole page. Because you are likely to apply this function to more than one company, you will add a field with the company name. This can be helpful in later analysis when you want to compare different companies.

You wrap this function in a command that extracts the HTML from the URL, so that handling becomes more convenient.
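Put together, the page-level function and its URL wrapper could be sketched as follows. All class names and the `count-` rating encoding are assumptions, and the sketch presumes that every review on the page has all four fields, so the extracted vectors have equal length.

```r
library(rvest)
library(tibble)

# Build one tibble for a parsed page; selectors are illustrative assumptions
get_data_table <- function(html, company_name) {
  reviews   <- html %>% html_nodes(".review-body") %>% html_text(trim = TRUE)
  reviewers <- html %>% html_nodes(".consumer-name") %>% html_text(trim = TRUE)
  dates     <- html %>% html_nodes(".review-date") %>% html_attr("datetime")
  classes   <- html %>% html_nodes(".star-rating") %>% html_attr("class")
  ratings   <- as.numeric(sub(".*count-(\\d).*", "\\1", classes))

  tibble(
    reviewer = reviewers,
    date     = as.POSIXct(dates, format = "%Y-%m-%dT%H:%M:%S", tz = "UTC"),
    rating   = ratings,
    review   = reviews,
    company  = company_name   # label for later comparisons across companies
  )
}

# Convenience wrapper: accepts a URL (or a local file path) instead of parsed HTML
get_data_from_url <- function(url, company_name) {
  html <- read_html(url)
  get_data_table(html, company_name)
}

# Offline check with a hypothetical page saved to a temporary file
tmp <- tempfile(fileext = ".html")
writeLines('
  <div>
    <article><span class="consumer-name">Alice</span>
      <div class="star-rating count-5"></div>
      <time class="review-date" datetime="2018-02-01T10:15:00Z"></time>
      <p class="review-body">Fast delivery</p></article>
    <article><span class="consumer-name">Bob</span>
      <div class="star-rating count-2"></div>
      <time class="review-date" datetime="2018-02-03T18:40:00Z"></time>
      <p class="review-body">Parcel arrived late</p></article>
  </div>', tmp)

page_table <- get_data_from_url(tmp, "some-company")
```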

In the last step, you apply this function to the list of URLs you generated earlier. To do this, you use the `map()` function from the `purrr` package, which is part of the `tidyverse`. It applies the same function over the items of a list. You already used the function earlier; there, however, you passed a number `n`, which is shorthand for extracting the `n`-th sub-item of each list element.
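The two ways of calling `map()` can be seen side by side in this small sketch, which uses a made-up list instead of scraped pages:

```r
library(purrr)

# Made-up nested list: each element holds a page number and its ratings
pages <- list(
  list(page = 1, ratings = c(5, 4)),
  list(page = 2, ratings = c(3, 5))
)

# Passing a function: it is applied to every item of the list
n_reviews <- map(pages, function(p) length(p$ratings))

# Passing a number: shorthand for extracting the 2nd sub-item of every item
second_items <- map(pages, 2)
```

In the scraper, the list would be the vector of page URLs and the function would be the URL wrapper from the previous step.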

Finally, you write one convenient function that takes as input the URL of the landing page of a company and the label you want to give the company. It extracts all reviews, binding them into one tibble. This is also a good starting point for optimising the code. The function processes the pages in sequence, but it does not have to. One could apply parallelisation here, so that several CPUs each get the reviews for a subset of the pages and the results are only combined at the end.

You save the result to disk using a tab-separated file, instead of the common comma-separated files (CSVs), as the reviews often contain commas, which may confuse the parser.

As an example you can apply the function to Amazon:
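The complete function is not preserved in this copy. The sketch below shows one way it could be assembled; to stay short it only collects the review text. The class names, the `?page=` suffix, the function name `scrape_write_table`, and the `fetch` argument (a hook that makes the function testable without network access) are all assumptions introduced for illustration.

```r
library(rvest)
library(purrr)
library(tibble)
library(dplyr)
library(readr)

scrape_write_table <- function(url, company_name, out_file, fetch = read_html) {
  first_page <- fetch(url)

  # The second to last pagination button holds the page count (assumed class)
  buttons   <- first_page %>% html_nodes(".pagination-page") %>% html_text(trim = TRUE)
  last_page <- as.numeric(buttons[length(buttons) - 1])

  # One URL per review page (assumed "?page=" pattern)
  page_urls <- paste0(url, "?page=", seq_len(last_page))

  # Scrape the pages one after another and bind the results into one tibble
  reviews <- page_urls %>%
    map(function(page_url) {
      html <- fetch(page_url)
      tibble(
        review  = html %>% html_nodes(".review-body") %>% html_text(trim = TRUE),
        company = company_name
      )
    }) %>%
    bind_rows()

  # Tab-separated output, because review text often contains commas
  write_tsv(reviews, out_file)
  reviews
}

# Usage against a live site (not run here; substitute the real landing page URL):
# amazon <- scrape_write_table("<landing page URL>", "amazon", "amazon.tsv")
```

Because the pages are independent of each other, the `map()` call is the natural place to introduce parallel processing later.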

Case Study: A Tale of Two Companies

With the webscraping function from the previous section, you can quickly obtain a lot of data. With this data, many different analyses are possible.

In this case study, you'll only use meta-data of the reviews, namely their rating and the time of the review.

First, you load additional libraries.

The companies you are interested in are both prominent players in the same industry. They both also use their Trustpilot scores as selling points on their websites.

You have scraped their data and only need to load it:

You can summarise their overall numbers with the help of the `group_by()` and `summarise()` functions from the `dplyr` package:

| company | count | mean_rating |
|---|---|---|
| company_A | 3628 | 4.908214 |
| company_B | 2615 | 4.852773 |
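A summary of this shape could be produced along the following lines. The sketch runs on a small made-up data frame, not on the tutorial's data, so its numbers differ from the table above.

```r
library(dplyr)

# Toy stand-in for the scraped reviews (made-up values)
reviews <- tibble(
  company = c("company_A", "company_A", "company_B", "company_B", "company_B"),
  rating  = c(5, 4, 5, 3, 4)
)

# One row per company with the number of reviews and their average rating
summary_table <- reviews %>%
  group_by(company) %>%
  summarise(count = n(), mean_rating = mean(rating))
```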

The average ratings for the two companies look comparable. Company B, which has been in business for a slightly longer period of time, also seems to have a slightly lower rating.

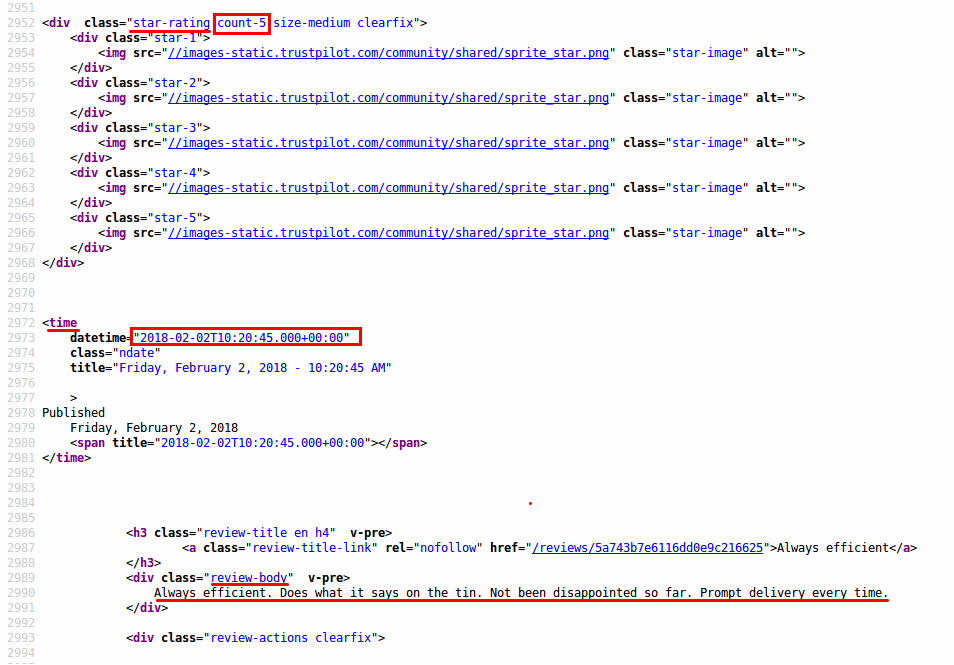

Comparing Time Series

A good starting point for further analysis is to look at the month-by-month rating performance of each company. First, you extract time series from the data and then subset them to a period in which both companies were in business and sufficient review activity was generated. This period can be found by visualising the time series. If there are very large gaps in the data for several months on end, then conclusions drawn from the data are less reliable.

You can now apply the monthly averaging with the `apply.monthly()` function from the `xts` package. To start, gather the mean monthly ratings for each company. Time series can have more than one observation for the same index. To specify that the average is taken with respect to one field, here the rating, you have to pass a column-wise averaging function such as `colMeans`.

Next, pass `length` to the `FUN` argument to retrieve the monthly counts. This works because the time series can be seen as a vector: each review increases the length of that vector by one, so `length` essentially counts the reviews.
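Both aggregations are sketched below on a toy series with made-up values; the real input would be the scraped ratings indexed by their submission time.

```r
library(xts)

# Toy series: one row per review, indexed by submission time (made-up values).
# Note the duplicated timestamp, which xts allows.
times <- as.POSIXct(c("2018-01-05 10:00", "2018-01-20 15:30",
                      "2018-02-02 09:00", "2018-02-02 09:00"), tz = "UTC")
reviews_ts <- xts(cbind(rating = c(5, 3, 4, 2)), order.by = times)

# Mean rating per month: colMeans averages column-wise, here over "rating"
monthly_mean <- apply.monthly(reviews_ts, colMeans)

# Number of reviews per month: length() counts the observations in each month
monthly_count <- apply.monthly(reviews_ts, FUN = length)
```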

Next, you can compare the monthly ratings and counts. It's very easily done by plotting the monthly average ratings for each company and the counts for those ratings for each company in separate plots and then arranging them in a grid:

It seems company A has much more consistently high ratings. But not only that, for company B, the monthly number of reviews shows very pronounced spikes, especially after a bout of mediocre reviews.

Could there be a chance of foul play?

Aggregate the Data Further

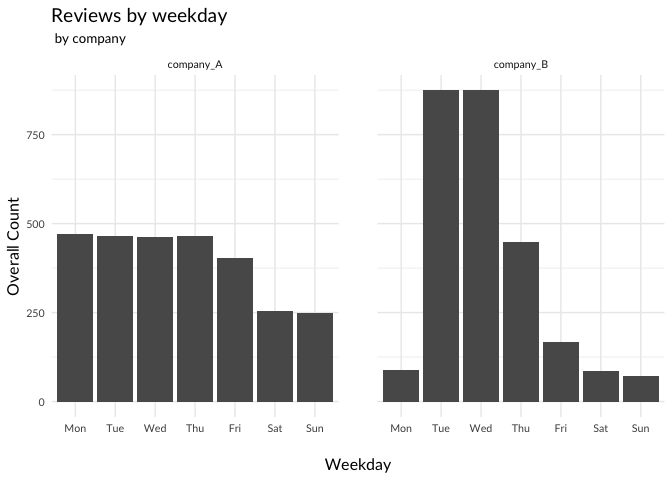

Now that you have seen that the data, especially for company B, changes quite dramatically over time, a natural question to ask is how the review activity is distributed within a week or within a day.

If you plot the reviews by week day and hour of the week, you also get remarkable differences:

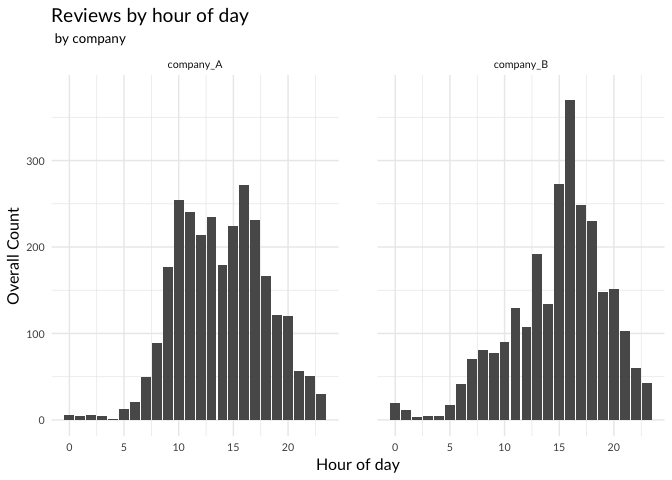

Splitting the reviews by time of day also shows striking differences between the two competitors:

It looks as if more reviews are written during the day than at night. Company B however shows a pronounced peak in the reviews written in the afternoon.

The Null Hypothesis

These patterns seem to indicate that there is something fishy going on at company B. Maybe some of the reviews are not written by users, but rather by professionals. You would expect that these reviews are, on average, better than those that are written by ordinary people. Since the review activity for company B is so much higher during weekdays, it seems likely that professionals would write their reviews on one of those days. You can now formulate a null hypothesis which you can try to disprove using the evidence from the data.

There is no systematic difference between reviews written on a working day versus reviews written on a weekend.

To test this, you divide company B's reviews into those that are written on weekdays and those written on weekends, and check their average ratings:

| company | weekday | weekend |
|---|---|---|
| company_A | 4.898103 | 4.912176 |
| company_B | 4.864545 | 4.666667 |
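The split itself can be sketched as follows, again on made-up data rather than the scraped reviews. The ISO weekday number from `format(date, "%u")` is used so that the result does not depend on the locale.

```r
library(dplyr)

# Toy reviews (made-up values): 2018-02-02 is a Friday, 03 a Saturday,
# 04 a Sunday and 05 a Monday
reviews <- tibble(
  date   = as.POSIXct(c("2018-02-02 10:00", "2018-02-03 11:00",
                        "2018-02-04 12:00", "2018-02-05 13:00"), tz = "UTC"),
  rating = c(5, 4, 2, 5)
)

by_day_type <- reviews %>%
  # "%u" gives the ISO weekday number: 1 = Monday, ..., 6 = Saturday, 7 = Sunday
  mutate(day_type = if_else(format(date, "%u") %in% c("6", "7"),
                            "weekend", "weekday")) %>%
  group_by(day_type) %>%
  summarise(avg_rating = mean(rating))
```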

It certainly looks like a small enough difference, but is it pure chance?

You can extract the difference in averages as a single number, which serves as the observed test statistic.

If there really was no difference between working days and weekends, then you could simply permute the label and the difference between the averages should be of the same order of magnitude. A convenient way to do this sort of 'Hacker Statistics' is provided by the `infer` package.

With it, you specify the relation you want to test and your hypothesis of independence, and you tell the function to generate 10000 permutations, calculating the difference in mean rating for each. You can then calculate how frequently a permutation produces, by chance, such a difference in mean ratings.
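The permutation test and the frequency calculation described above could look as follows. The data are made up and the number of permutations is reduced to 1000 to keep the sketch fast, so the resulting p-value says nothing about either company.

```r
library(dplyr)
library(infer)

set.seed(42)  # make the permutations reproducible

# Toy reviews (made-up values): 12 written on weekdays, 6 on weekends
reviews <- tibble(
  day_type = factor(rep(c("weekday", "weekend"), times = c(12, 6))),
  rating   = c(5, 5, 5, 4, 5, 5, 5, 5, 4, 5, 5, 5,
               5, 3, 4, 2, 5, 3)
)

# Observed difference in mean rating (weekday minus weekend)
observed_diff <- with(reviews,
  mean(rating[day_type == "weekday"]) - mean(rating[day_type == "weekend"]))

# Shuffle the day labels many times and recompute the difference each time
permutations <- reviews %>%
  specify(rating ~ day_type) %>%
  hypothesize(null = "independence") %>%
  generate(reps = 1000, type = "permute") %>%
  calculate(stat = "diff in means", order = c("weekday", "weekend"))

# Share of permutations with a difference at least as extreme as the observed one
p_value <- mean(abs(permutations$stat) >= abs(observed_diff))
```

A small `p_value` means that label shuffling rarely reproduces a gap as large as the observed one.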

Indeed, if there were no real difference, an effect as large as the observed one would arise by chance only exceedingly rarely. This does not prove any wrongdoing; however, it is very suspicious. For example, doing the same experiment for company A gives a p-value of 0.561, which means that it is very likely to get a value as extreme as the one observed by chance alone, so the null hypothesis cannot be rejected for company A.

Conclusion: Don't Trust the Reviews (Blindly)

In this tutorial, you have written a simple program that allows you to scrape data from the website Trustpilot. The data is structured in a tidy data table and presents an opportunity for a large number of further analyses.

As an example, you scraped information for two companies that work in the same industry. You analysed their meta-data and found suspicious patterns for one. You used hypothesis testing to show that there is a systematic effect of the weekday on one company's ratings. This is an indicator that reviews have been manipulated, as there is no other good explanation of why there should be such a difference. You could not verify this effect for the other company, which however does not mean that their reviews are necessarily honest.

More information on review websites being plagued by fake reviews can be found for example in the Guardian.
